An upgrade I made for the merger of Djangui and Wooey was to handle cases where multiple arguments can be passed to a parameter. Djangui is a program that wraps Python scripts in a web interface, so its forms are built dynamically from each script's argparse arguments. However, when a parameter accepts an arbitrary number of arguments, such as multiple files separated by spaces on the command line, we need slightly more than the standard input. This is complicated by supporting custom widgets from 3rd party apps: we don't want to assume anything about what a widget's output is.

For me, a complete solution required several aspects:

- The ability for the user to arbitrarily add extra fields

- A limit on the number of fields, if the script specifies one

- A way to clone past jobs and auto-populate multiple selections

Adding extra fields and limiting the number of extra fields

Adding extra attributes that get rendered into a widget's HTML is quite easy. You simply add whatever you want to show up to the widget's attrs dictionary:

```python
field.widget.attrs.update({'data-attribute-here': 'data-value-here'})
```

This then lets you do any front-end work in JavaScript, such as checking the maximum number of extra fields, with code such as:

```python
field.widget.attrs.update({'data-wooey-choice-limit': choice_limit})
```
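To see what the front end actually receives, here is a minimal pure-Python sketch of how an attrs dictionary turns into HTML attributes. `render_attrs` is a hypothetical helper that only roughly mimics Django's own attribute rendering (it is not Django's API), and the field name `input_files` is made up for illustration.

```python
from html import escape


def render_attrs(attrs):
    """Turn an attrs dict into an HTML attribute string.

    Hypothetical helper, roughly mimicking how a widget's attrs end up
    in its HTML: each pair becomes key="value" with the value escaped,
    True renders as a bare attribute, and False/None are dropped.
    """
    parts = []
    for key, value in attrs.items():
        if value is True:
            parts.append(' {}'.format(key))
        elif value is False or value is None:
            continue
        else:
            parts.append(' {}="{}"'.format(key, escape(str(value))))
    return ''.join(parts)


# Simulate field.widget.attrs after the update() call above.
attrs = {'type': 'file', 'name': 'input_files'}
choice_limit = 3
attrs.update({'data-wooey-choice-limit': choice_limit})

html = '<input{}>'.format(render_attrs(attrs))
print(html)
# <input type="file" name="input_files" data-wooey-choice-limit="3">
```

JavaScript on the page can then read the limit straight from the `data-wooey-choice-limit` attribute.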

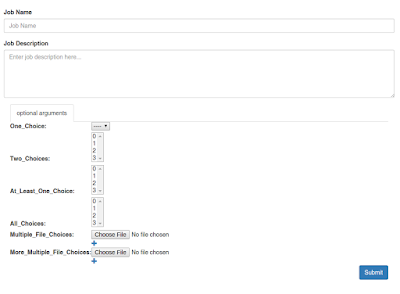

For adding extra fields, this isn't exactly what I want though. This approach will put the attribute on every single widget. To make this clear, this is the final implementation:

You can see there are plus signs next to two parameters that accept multiple file inputs. If we put that extra attribute on the widget, we would create a + sign for every copy of the parameter's widget instead of a single button that means "add a new input for this field". To avoid that, we'd have to do a bunch of checks in JavaScript to work out which widget is the last one, and so on, which is too complicated to get right.

To get around this, I chose to wrap the input field in a div. This is done by patching the widget's render method to:

- Get the rendered content

- Wrap that content with an element

This is what it looks like:

```python
from django.utils.safestring import mark_safe

WOOEY_MULTI_WIDGET_ATTR = 'data-wooey-multiple'
WOOEY_MULTI_WIDGET_ANCHOR = 'wooey-multi-input'


def mutli_render(render_func, appender_data_dict=None):
    # Wrap a widget's bound render method so its output is enclosed in a div
    # carrying the WOOEY_MULTI_WIDGET_ATTR marker attribute.
    # **kwargs passes through extra arguments, such as the `renderer`
    # argument that newer Django versions supply.
    def render(name, value, attrs=None, **kwargs):
        # The tag is a marker for our javascript to reshuffle the elements.
        # This is because some widgets have complex rendering with multiple fields
        return mark_safe('<{tag} {multi_attr}>{widget}</{tag}>'.format(
            tag='div',
            multi_attr=WOOEY_MULTI_WIDGET_ATTR,
            widget=render_func(name, value, attrs, **kwargs)))
    return render


# this is a dict we can put any attributes for the wrapping element in
appender_data_dict = {}

# `field` is a field of the dynamically built form
field.widget.render = mutli_render(field.widget.render, appender_data_dict=appender_data_dict)
```

Now we can look for the data-wooey-multiple attribute, which marks a div wrapping one copy of a field's widget (including every input, for widgets that render several). This lets us easily count how many copies of a field the user has created to enforce the limit, and it means a single selector can handle everything.
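In Wooey this counting happens in JavaScript on the page. As a rough, Python-only sketch of the same logic, the snippet below counts elements carrying the marker attribute in some rendered HTML and compares the count with a limit. `WrapperCounter` and `can_add_another` are hypothetical names, and the HTML is a made-up example.

```python
from html.parser import HTMLParser

WOOEY_MULTI_WIDGET_ATTR = 'data-wooey-multiple'


class WrapperCounter(HTMLParser):
    """Count start tags that carry the multi-widget marker attribute."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; a bare attribute has value None
        if any(name == WOOEY_MULTI_WIDGET_ATTR for name, _ in attrs):
            self.count += 1


def can_add_another(rendered_html, choice_limit=None):
    """Return True if the user may add one more copy of the field.

    choice_limit=None means the script set no limit.
    """
    counter = WrapperCounter()
    counter.feed(rendered_html)
    counter.close()
    return choice_limit is None or counter.count < choice_limit


rendered = (
    '<div data-wooey-multiple><input type="file" name="f"></div>'
    '<div data-wooey-multiple><input type="file" name="f"></div>'
)
print(can_add_another(rendered, choice_limit=2))  # False
print(can_add_another(rendered, choice_limit=3))  # True
print(can_add_another(rendered))                  # True
```

Because every copy of the widget sits in exactly one marked wrapper, the count is just the number of wrappers, no matter how many inputs a complex widget renders inside each one.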

Auto-populate multiple initial values

I accomplished this by extending my earlier approach of overriding the render method. First, I made it so all my initial values would be passed as a list. Given that, it was simple to expand the render method above into this:

```python
from django.forms.utils import flatatt
from django.utils.html import format_html
from django.utils.safestring import mark_safe


def mutli_render(render_func, appender_data_dict=None):
    def render(name, values, attrs=None, **kwargs):
        if not isinstance(values, (list, tuple)):
            values = [values]
        # The tag is a marker for our javascript to reshuffle the elements.
        # This is because some widgets have complex rendering with multiple fields
        pieces = ['<{tag} {multi_attr}>{widget}</{tag}>'.format(
            tag='div',
            multi_attr=WOOEY_MULTI_WIDGET_ATTR,
            widget=render_func(name, value, attrs, **kwargs)) for value in values]
        # we add a final piece that is our button to click for adding. It's useful
        # to have it here instead of the template so we don't have to
        # reverse-engineer who goes with what
        # build the attribute string from the dict
        data_attrs = flatatt(appender_data_dict if appender_data_dict is not None else {})
        # Assumed minimal markup for the add button: an anchor pointing at
        # WOOEY_MULTI_WIDGET_ANCHOR and carrying the extra data attributes
        pieces.append(format_html('<a href="#{anchor}"{data}>+</a>',
                                  anchor=WOOEY_MULTI_WIDGET_ANCHOR, data=data_attrs))
        return mark_safe('\n'.join(pieces))
    return render
```
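The expanded render method relies on initial values arriving as lists. A minimal sketch of that normalization step when cloning a past job might look like this, assuming the saved job is available as (parameter, value) pairs; `build_initial` and the sample data are hypothetical, not Wooey's actual storage format.

```python
def build_initial(saved_params):
    """Group saved (parameter, value) pairs into lists of initial values.

    Every parameter ends up mapped to a list, even when it has a single
    value, so the render wrapper can always iterate over it.
    """
    initial = {}
    for name, value in saved_params:
        initial.setdefault(name, []).append(value)
    return initial


past_job = [
    ('input_files', 'a.txt'),
    ('input_files', 'b.txt'),
    ('threshold', 0.5),
]
print(build_initial(past_job))
# {'input_files': ['a.txt', 'b.txt'], 'threshold': [0.5]}
```

A dict like this could then be passed as a form's `initial`, so each field's render receives a list and draws one wrapped widget per saved value.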

Unfortunately, it wasn't that simple. A field's clean method expects a single value, so handing it a list of values will fail. Additionally, a widget's value_from_datadict method usually returns only one value (for a QueryDict, `get` returns the last value submitted under that name). To avoid subclassing every single widget to correct this behavior, I once again used my monkey-patch/decorator hybrid to override the clean and value_from_datadict methods:

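```python
from django.http import QueryDict


def multi_value_from_datadict(func):
    def value_from_datadict(data, files, name):
        values = []
        for value in data.getlist(name):
            # Give the widget's original value_from_datadict a QueryDict holding
            # exactly one value. setlist() avoids the URL-decoding problems of
            # building a query string by hand (e.g. values containing '&' or '=').
            single = QueryDict('', mutable=True)
            single.setlist(name, [value])
            values.append(func(single, files, name))
        return values
    return value_from_datadict


def multi_value_clean(func):
    def clean(values, *args, **kwargs):
        # Run the field's original clean() once per value, so its normal
        # validation and error messages apply to each value.
        return [func(value, *args, **kwargs) for value in values]
    return clean


field.widget.value_from_datadict = multi_value_from_datadict(field.widget.value_from_datadict)
field.clean = multi_value_clean(field.clean)
```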
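To show how the clean wrapper reuses a field's own validation, here is a Django-free sketch. `IntegerField` and `ValidationError` are simplified stand-ins (not Django's classes), and `multi_value_clean` is a compact version of the wrapper above. Patching the bound method on one field instance leaves other instances of the class untouched.

```python
class ValidationError(Exception):
    """Stand-in for django.core.exceptions.ValidationError."""


class IntegerField:
    """Hypothetical minimal field whose clean() validates a single value."""

    def clean(self, value):
        try:
            return int(value)
        except (TypeError, ValueError):
            raise ValidationError('Enter a whole number: {!r}'.format(value))


def multi_value_clean(func):
    # Call the original (bound) clean once per value and collect the results.
    def clean(values, *args, **kwargs):
        return [func(value, *args, **kwargs) for value in values]
    return clean


field = IntegerField()
field.clean = multi_value_clean(field.clean)  # patch this instance only

print(field.clean(['1', '2', '3']))  # [1, 2, 3]
try:
    field.clean(['4', 'five'])
except ValidationError as exc:
    print(exc)  # Enter a whole number: 'five'
```

Note that the list comprehension stops at the first invalid value, so a field reports the first failing value's error rather than one error per value.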

Summary

The above approach lets the user add widgets to a given field at will, with the option to limit how many can be added. It also has some very useful properties:

- It should work with most field widgets (a widget whose render method expects different arguments may break it).

- It utilizes existing clean methods to perform validations.

- It will generate correct errors for each field without any extra work.

The full implementation can be seen on the merger branch of Wooey and Djangui, here.

I'm not sure whether my method of overriding the function is best called a monkey-patch or a decorator; it seems a bit of both to me. If anyone knows the correct term, let me know.
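For what it's worth, here is one way to frame the distinction in Python terms: a function that takes a callable and returns a wrapped one behaves like a decorator whether or not `@` syntax is used, and assigning the wrapped result onto an existing object at runtime is what makes it a monkey-patch. The `logged` decorator and `Widget` class below are purely illustrative.

```python
import functools


def logged(func):
    """A plain decorator: wraps func and counts how often it is called."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


class Widget:
    def render(self, name):
        return '<input name="{}">'.format(name)


a, b = Widget(), Widget()

# Monkey-patch: the decorator is applied at runtime and the result is
# assigned to one instance, shadowing the class's method for `a` only.
a.render = logged(a.render)

print(a.render('x'), a.render.calls)  # <input name="x"> 1
print(b.render('y'))                  # <input name="y">
print(hasattr(b.render, 'calls'))     # False
```

Decorating `render` with `@logged` inside the class body would instead affect every `Widget`; patching the instance is what lets the multi-value behavior apply only to the fields that need it.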